Comparing election forecast accuracy

How accurate are different prediction platforms on the US 2022 midterm elections?

Update: results and analysis are posted in my follow-up post here.

It's interesting to compare forecasts between different prediction platforms, but they rarely ask questions similar enough to compare directly. Elections offer one helpful opportunity.

I scored several prediction platforms on a set of 10 questions on the outcome of the 2022 US midterm elections: Senate and House control, and several individual Senate and gubernatorial races with high forecasting interest.

I compared these prediction platforms:

FiveThirtyEight (statistical modeling and poll aggregation)

Metaculus (prediction aggregator)

Good Judgment Open (prediction aggregator)

Polymarket (real-money prediction market)

PredictIt (real-money prediction market)

Election Betting Odds (prediction market aggregator)

Manifold (play-money prediction market)

(If there are any other forecasts you want to compare, just comment with a list of their predictions on each of the questions and I can add them fairly easily.)

For each prediction platform, I took the predicted probabilities on Monday evening and computed the average log score across these questions. The log score measures prediction accuracy: a higher log score means better accuracy.
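A minimal sketch of this averaging step, assuming each platform's forecast is stored as the probability that the Republican wins and each outcome as True/False, with None for an unresolved race such as a pending runoff. The function name and data layout are hypothetical, and this uses the raw natural-log score before any transformation.

```python
import math
from typing import Mapping, Optional


def average_log_score(
    forecasts: Mapping[str, float],
    outcomes: Mapping[str, Optional[bool]],
) -> float:
    """Mean natural-log score over resolved questions (higher is better).

    forecasts: question -> predicted probability that the Republican wins.
    outcomes:  question -> True if the Republican won, False if not, or
               None if the question is still unresolved.
    """
    scores = []
    for question, p_rep in forecasts.items():
        outcome = outcomes.get(question)
        if outcome is None:
            continue  # unresolved or missing outcome: leave it out of the average
        # Probability the forecast placed on what actually happened.
        # (math.log raises ValueError if this is exactly 0.)
        p_actual = p_rep if outcome else 1.0 - p_rep
        scores.append(math.log(p_actual))
    if not scores:
        raise ValueError("no resolved questions to score")
    return sum(scores) / len(scores)


# Hypothetical values for illustration, not real platform forecasts.
forecasts = {"senate": 0.6, "house": 0.8, "ga_senate": 0.5}
outcomes = {"senate": False, "house": True, "ga_senate": None}
print(round(average_log_score(forecasts, outcomes), 4))  # → -0.5697
```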

Important note: because the races are highly correlated, the election behaves much more like one overall prediction than like a set of independent predictions. The best-scoring forecast will probably be the one that came closest on the broader question of how far the nation as a whole swung left or right, or how far the polls were biased in either direction, and a large part of that is chance. So despite the large number of individual races, each election cycle is roughly one data point; to measure accuracy well, we would need to repeat this experiment over several election cycles.
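The following toy Monte Carlo simulation, which is not part of the scoring methodology, illustrates why correlated races act like roughly one data point. It assumes each race's Republican margin is a fixed baseline plus a national error shared by every race plus independent race-level noise; the baselines and error sizes are made up. Both settings give each race the same win probability, but when the shared error dominates, the number of races won swings far more from cycle to cycle.

```python
import random
import statistics


def simulate_rep_wins(
    baselines: list[float],
    national_sd: float,
    local_sd: float,
    n_cycles: int,
    seed: int = 0,
) -> list[int]:
    """Simulate how many races the Republican wins in each of n_cycles.

    Each race's margin = baseline + shared national error + local noise.
    The national error is drawn once per cycle and shared by every race,
    which is what makes the race outcomes correlated.
    """
    rng = random.Random(seed)
    wins_per_cycle = []
    for _ in range(n_cycles):
        national = rng.gauss(0.0, national_sd)  # same shift for all races this cycle
        wins = sum(
            1 for b in baselines
            if b + national + rng.gauss(0.0, local_sd) > 0
        )
        wins_per_cycle.append(wins)
    return wins_per_cycle


# Illustrative Republican margins in points, not real polling averages.
baselines = [-3.0, -1.0, 0.0, 1.0, 2.0, 4.0]

# Both settings have total error variance 10 per race (3^2 + 1^2 = 10),
# so each race's win probability is identical; only the correlation differs.
correlated = simulate_rep_wins(baselines, national_sd=3.0, local_sd=1.0, n_cycles=20_000)
independent = simulate_rep_wins(baselines, national_sd=0.0, local_sd=10 ** 0.5, n_cycles=20_000)

print("mean wins:", statistics.mean(correlated), statistics.mean(independent))
print("variance: ", statistics.variance(correlated), statistics.variance(independent))
```

The means come out nearly equal while the correlated variance is several times larger, which is the sense in which one cycle's results give much less independent evidence than the race count suggests.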

Results

Update: final results and analysis are posted in my follow-up post here.

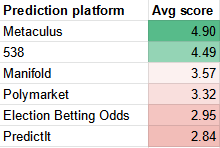

The preliminary results, scored based on all called races - the Georgia Senate race is pending runoff and therefore not scored currently:

The full results can be found in this spreadsheet: https://firstsigma.github.io/midterms22-forecast-comparison

These are transformed log scores: higher (green) means more accurate, lower (red) means less accurate. The maximum score is 10, and a 50% guess scores 0. A prediction that favors the correct outcome gets a positive score, and one that favors the wrong outcome gets a negative score. See below for more details on how the scoring rule works.

Analysis

My next post will go into more detail interpreting these results. You can read a preview of the post here or subscribe to my mailing list to receive it when it’s published.

Questions compared

I selected this set of 10 questions to compare across prediction platforms:

Senate control

House control

Senate races

Pennsylvania - Mehmet Oz (R) vs John Fetterman (D)

Nevada - Adam Laxalt (R) vs Catherine Cortez Masto (D)

Georgia - Herschel Walker (R) vs Raphael Warnock (D)

Wisconsin - Ron Johnson (R) vs Mandela Barnes (D)

Ohio - J. D. Vance (R) vs Tim Ryan (D)

Arizona - Blake Masters (R) vs Mark Kelly (D)

Governor races

Texas - Greg Abbott (R) vs Beto O'Rourke (D)

Pennsylvania - Doug Mastriano (R) vs Josh Shapiro (D)

As of today, all of these races have been called except the Georgia Senate race, which goes to a runoff, so my results exclude Georgia. However, the overall accuracy rankings don't change much regardless of which candidate ends up winning Georgia: Metaculus still scores best, closely followed by 538.
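A claim like "the rankings don't change much regardless of who wins Georgia" can be checked by scoring the pending race under each possible outcome and comparing the rankings. A minimal sketch, assuming forecasts are stored as P(Republican wins); the platform names, question keys, and probabilities below are placeholders, not the actual forecasts.

```python
import math


def avg_log_score(forecasts: dict[str, float], outcomes: dict[str, bool]) -> float:
    """Mean natural-log score; outcomes map question -> True if the Republican won."""
    logs = [math.log(p if outcomes[q] else 1.0 - p) for q, p in forecasts.items()]
    return sum(logs) / len(logs)


def rankings_by_scenario(
    platforms: dict[str, dict[str, float]],
    called: dict[str, bool],
    pending: str,
) -> dict[bool, list[str]]:
    """Rank platforms (best first) under each possible outcome of the pending question."""
    result = {}
    for pending_outcome in (True, False):
        outcomes = {**called, pending: pending_outcome}
        scores = {name: avg_log_score(f, outcomes) for name, f in platforms.items()}
        result[pending_outcome] = sorted(scores, key=scores.get, reverse=True)
    return result


# Placeholder data for illustration only.
platforms = {
    "platform_a": {"q1": 0.3, "q2": 0.8, "ga": 0.55},
    "platform_b": {"q1": 0.5, "q2": 0.6, "ga": 0.45},
}
called = {"q1": False, "q2": True}
print(rankings_by_scenario(platforms, called, pending="ga"))
# → {True: ['platform_a', 'platform_b'], False: ['platform_a', 'platform_b']}
```

If the two scenarios produce the same ordering, the pending race cannot change the ranking; if they differ, the ranking depends on how the race resolves.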

I selected these races because they drew a lot of interest across the prediction platforms. I used a limited set of questions mainly because not all platforms made forecasts on all races; the biggest limiting factor was which questions were on Metaculus. (I later found a couple more races on Metaculus, but did not add them because I had already preregistered the question set.) A smaller set of questions also makes data collection easier for me. A different list of questions could have produced slightly different results, but because I chose them based solely on forecasting interest (and not, e.g., on which platforms were predicting higher or lower), and because I preregistered the list in advance, I believe the comparison is unbiased.

Not all of them are highly competitive races, which is a good thing: it lets us see how accurate and well calibrated the predictions are across races with a range of competitiveness.

My methodology was preregistered in this post.

Scoring

The scoring rule I use is the log score, with a linear transformation applied to make it easier to interpret: the maximum score is set to 10 (for a correct, 100% confident prediction), and a guess of 50% scores 0. Higher scores are better; favoring the right outcome earns a positive score, and favoring the wrong outcome a negative one. This is based on Calibration Scoring Rules for Practical Prediction Training.
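The two constraints stated here (a score of 10 at 100% on the actual outcome, 0 at 50%) determine the linear transformation uniquely. Writing the transformed score as $S(p) = a + b \ln p$, where $p$ is the probability placed on the actual outcome and $S$, $a$, $b$ are symbols introduced only for this derivation:

```latex
\begin{aligned}
S(1) &= a + b \ln 1 = a = 10, \\
S\left(\tfrac{1}{2}\right) &= 10 + b \ln \tfrac{1}{2} = 0 \;\Rightarrow\; b = \frac{10}{\ln 2}, \\
S(p) &= 10 + \frac{10}{\ln 2} \ln p = 10 \left(1 + \log_2 p\right).
\end{aligned}
```

So every halving of the probability placed on the actual outcome costs 10 points: 25% on the actual outcome scores -10, and 12.5% scores -20.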

I chose the log score over the Brier score (another common scoring rule) because of its many attractive properties described in this paper. The log score penalizes very confident wrong predictions much more heavily than the Brier score does, which I think usually better reflects how bad such errors really are.
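A quick numerical comparison makes the difference in penalties concrete. This sketch uses the standard binary Brier score, (forecast - outcome)^2, which equals (1 - p)^2 when p is the probability placed on the actual outcome, and the negated natural-log score -ln p. Both are written as penalties, so lower is better for each.

```python
import math


def brier_penalty(p_actual: float) -> float:
    """Binary Brier score: (1 - p)^2 for probability p on the actual outcome. Max is 1."""
    return (1.0 - p_actual) ** 2


def log_penalty(p_actual: float) -> float:
    """Negated natural-log score: 0 for a certain correct forecast, unbounded as p -> 0."""
    return -math.log(p_actual)


# Probability placed on the outcome that actually happened.
for p in (0.5, 0.1, 0.01, 0.001):
    print(f"p={p:<6} brier={brier_penalty(p):.3f} log={log_penalty(p):.3f}")
```

Going from 10% to 0.1% on the actual outcome barely moves the Brier penalty (0.81 to about 1.0), since it is capped at 1, but triples the log penalty (about 2.3 to about 6.9).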

The original log score is log(predicted probability of the actual outcome). It is never positive (it is zero only for a 100% prediction on the actual outcome), and higher, i.e. closer to zero, is better. To make it easier to interpret, I apply the practical scoring rule transformation described in the paper, with the parameters described above (s_max = 10, p_max = 1).
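A minimal Python version of the transformed score, assuming only the parameters stated here (10 points at p = 1 and 0 points at p = 0.5). With those two anchor points, a linear transformation of ln p works out to 10 × (1 + log2 p). The function name is hypothetical, and the sketch does not cover other parameter choices from the paper.

```python
import math


def transformed_log_score(p_actual: float, s_max: float = 10.0) -> float:
    """Linear transform of ln(p_actual) with S(1) = s_max and S(0.5) = 0.

    Equivalent to s_max * (1 + log2(p_actual)): each halving of the
    probability placed on the actual outcome subtracts s_max points.
    """
    if not 0.0 < p_actual <= 1.0:
        raise ValueError("p_actual must be in (0, 1]")
    return s_max * (1.0 + math.log2(p_actual))


for p in (1.0, 0.8, 0.5, 0.2):
    print(p, round(transformed_log_score(p), 2))
```

An 80% forecast on the eventual winner scores about 6.78, while 80% on the eventual loser (20% on the actual outcome) scores about -13.22, so a confident miss costs roughly twice what an equally confident hit earns.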

Fine print on methodology

If the winner of an election is not one of the current major-party candidates, I exclude that race from the calculation. This normalizes slightly different questions across platforms: some ask which candidate will win, others ask which party will win.
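A sketch of how differently framed questions might be normalized to one number per race, the probability that the Republican candidate wins, with the race excluded if anyone else wins. The framing labels "rep" and "dem" and both helper names are hypothetical.

```python
from typing import Optional


def rep_win_probability(framing: str, p_yes: float) -> float:
    """Convert a question's YES probability into P(Republican candidate wins).

    framing: "rep" if the question asks whether the Republican candidate
    (or party) wins, "dem" if it asks about the Democratic candidate (or party).
    Treating the two as complements is only valid because races won by
    anyone else are excluded.
    """
    if framing == "rep":
        return p_yes
    if framing == "dem":
        return 1.0 - p_yes
    raise ValueError(f"unknown framing: {framing!r}")


def resolve(winner: str, rep_candidate: str, dem_candidate: str) -> Optional[bool]:
    """True if the Republican won, False if the Democrat won, None (exclude) otherwise."""
    if winner == rep_candidate:
        return True
    if winner == dem_candidate:
        return False
    return None


print(resolve("John Fetterman", rep_candidate="Mehmet Oz", dem_candidate="John Fetterman"))  # → False
```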

For 538, I use the forecasts on this page https://projects.fivethirtyeight.com/2022-election-forecast/, i.e. the Deluxe model. I also score the Classic and Lite models for comparison.

For PredictIt, I compute the inferred Republican win probability as the average of the Republican YES price and (1 - the Democratic YES price). I do not use the NO prices, because the platform displays the YES prices most prominently.
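The PredictIt conversion is simple arithmetic. A minimal sketch, with prices expressed in dollars per share between 0 and 1 (a 62-cent price is 0.62); the function name and the example prices are hypothetical.

```python
def predictit_rep_probability(rep_yes: float, dem_yes: float) -> float:
    """Inferred P(Republican wins) from the two YES prices.

    Averages the Republican YES price with the complement of the
    Democratic YES price; NO prices are deliberately ignored.
    """
    return (rep_yes + (1.0 - dem_yes)) / 2.0


# Hypothetical prices: Republican YES at 62 cents, Democratic YES at 40 cents.
print(round(predictit_rep_probability(0.62, 0.40), 2))  # → 0.61
```

One property of this averaging: if the two YES prices sum to more than $1, as they often do on PredictIt, the excess is split evenly between the two sides instead of being assigned to either one.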

For Metaculus, I use the Metaculus Prediction. I also score the Metaculus Community Prediction for comparison.

For Manifold, there are often multiple questions on the same race, sometimes with slight differences in resolution criteria. I used only the prediction on the market featured on the main midterms map page https://manifold.markets/midterms.

Manifold has a separate instance for the Salem Center/CSPI Tournament, which I also compare. The market mechanics are the same, but it uses a separate play-money currency and has a similar but distinct user base.

This tournament does not have a question on the Texas governor race. (I added the tournament to my comparison after choosing the question set.) I substitute the main Manifold prediction for that race. For comparing main Manifold with Salem Manifold, this is equivalent to excluding the question, but it adds a small caveat when comparing Salem Manifold with other platforms.

I added Good Judgment Open to the comparison afterwards, and it did not have questions on the Ohio Senate race or the Texas governor race. For both, I substituted the 538 and Polymarket predictions, which happened to be identical. (This adds a small caveat when comparing Good Judgment Open with other platforms; when comparing it with 538 and Polymarket, the substitution is equivalent to excluding these questions.)
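Filling a missing question with a stand-in platform's forecast amounts to a simple merge. A minimal sketch; the helper name, question keys, and probabilities are hypothetical placeholders.

```python
def fill_missing(
    primary: dict[str, float],
    fallback: dict[str, float],
    questions: list[str],
) -> dict[str, float]:
    """Return primary's forecasts, borrowing fallback's for any missing question."""
    filled = {}
    for q in questions:
        if q in primary:
            filled[q] = primary[q]
        elif q in fallback:
            filled[q] = fallback[q]  # substituted forecast
        else:
            raise KeyError(f"no forecast for {q!r} on either platform")
    return filled


questions = ["senate", "oh_senate", "tx_gov"]
sparse = {"senate": 0.55}                                   # hypothetical values
stand_in = {"senate": 0.6, "oh_senate": 0.8, "tx_gov": 0.9}  # hypothetical values
print(fill_missing(sparse, stand_in, questions))
# → {'senate': 0.55, 'oh_senate': 0.8, 'tx_gov': 0.9}
```

A substituted question gives both platforms the same probability and therefore adds the same score to each platform's total, so the head-to-head ordering against the stand-in platform matches what excluding that question would give.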

I collected most of the data by hand on Monday night. My spreadsheet also links to the relevant forecasts, which show how each prediction changed over time, so you can check them for yourself. I collected the 538 forecasts by downloading their model outputs from https://github.com/fivethirtyeight/data/tree/master/election-forecasts-2022.

Disclaimer

I am a user and fan of all of these sites. I often read and use the predictions on all of them to better inform myself, both on the elections and on other topics. I am also a top-ranked Metaculus predictor and the current top-ranked Manifold predictor. I predicted and bet heavily on the election on Manifold and substantially influenced the Manifold prediction as a result (as discussed in my analysis post), but I designed the comparison methodology independently of my own predictions.

Further reading

Here’s another great comparison of midterm election results:

One previous election comparison which partly inspired this was this Metaculus comparison between 538 and PredictIt on the 2020 election.

For fun, I also made meta-predictions on which prediction platforms would be most accurate here:
