What can we learn from scoring different election forecasts?

Analyzing the accuracy of different prediction platforms on the US 2022 midterm elections

In my previous post I described how I compared the accuracy of election forecasts between different forecasting models and platforms. In this post, I'll dive into my interpretation of the results of this comparison.

The summary results are:

The full results can be found in this spreadsheet: https://firstsigma.github.io/midterms22-forecast-comparison

These are linearly transformed log scores: higher means more accurate, lower means less accurate. The max score is 10 and guessing 50% on everything gets score 0. See my previous post for more details on how the scoring rule works and why I chose it.
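As a rough sketch of what such a rule looks like: a log score that is linearly rescaled so that full confidence in the correct outcome scores 10 and a 50% guess scores 0 works out to 10 × (1 + log2(p)), where p is the probability the forecast gave to the outcome that actually happened. The function names and the choice to average across questions are illustrative assumptions here, not taken from the previous post.

```python
import math

def transformed_log_score(p_outcome: float) -> float:
    """Score for one question.

    p_outcome is the probability the forecast assigned to the outcome
    that actually occurred (must be > 0).
    """
    # Linear rescaling of log2(p): p = 1 gives 10, p = 0.5 gives 0.
    return 10 * (1 + math.log2(p_outcome))

def average_score(probs_on_outcomes):
    """Average the per-question scores (assumed aggregation, for illustration)."""
    scores = [transformed_log_score(p) for p in probs_on_outcomes]
    return sum(scores) / len(scores)
```

Note that the score is unbounded below: a forecast that gave only 25% to the eventual outcome scores -10, and more confident misses are penalized increasingly harshly.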

Don't read too much into prediction accuracy from just one election cycle

Firstly, as I warned in my previous post:

Important note: the election is much closer to one overall prediction than a set of independent predictions, because the races are highly correlated. The forecast that scores best is probably going to be the forecast that landed closest to the mark on the broader question of how much the nation overall went left or right, or how far left or right the polls were biased - and a large part of this is chance. So despite the large number of individual races, each election cycle can be thought of as roughly one data point, and to truly measure accuracy well, we'd need to run this experiment several times over different election cycles.

In the final section, I’ll also discuss some math on just how much the different scores on this one election mean - to summarize, you should probably only update how much you believe each platform over the others by about 10-40% - which is a pretty small amount. To put it another way, a very rough estimate is that you’d need to observe similar results 10-50 times to get enough confidence that the difference is statistically significant at p=0.05 (which is one common standard for academic studies). That's a lot considering that FiveThirtyEight has only been around for 8 election cycles!

Overall results

We can see that Metaculus (a prediction website and aggregator) scored highest, followed by FiveThirtyEight (which does poll aggregation and statistical modeling) - both beating the average of the platforms in this comparison. Next is Manifold, a play-money prediction market, with a near-average score. Scoring below that are the real-money prediction markets: Polymarket and PredictIt, as well as Election Betting Odds, which aggregates a couple of prediction markets together.

Going into the election, I considered 538 and Polymarket as sort of my "baseline" forecasts. 538 aggregates poll data into a statistical model, and its forecasts have a very strong track record. Meanwhile, prediction markets are probably the best reflection of a "consensus" forecast, because they attempt to factor in all available information - see Election Betting Odds's page on why prediction markets beat polls, for example. But not all prediction markets are equal - I considered Polymarket to be more reliable than PredictIt due to PredictIt's high fees and low trading caps.

The prediction markets all forecasted substantially more of a right lean than 538 - they predicted that the polls were skewed left, similar to the last 2 election cycles. This prediction matches what I typically saw both in mainstream media and among forecasters - I think the average prediction was that the polls would probably be wrong again in similar fashion, leading to a red wave, so it makes sense that the prediction markets reflected this prediction.

In this election cycle, it turned out that the polls were pretty accurate, and the polling bias and red wave predicted by the prediction markets did not materialize. As mentioned above, all the individual races are highly correlated, and much of the variation in the predictions can be explained by one overall prediction on the amount of leftward or rightward polling error.

FiveThirtyEight

FiveThirtyEight scored well, largely because its model stuck most closely to the polls. This 538 blog post describes the reasons they stuck more closely to the polls than the prediction markets did. The arguments for trusting the polls included that pollsters had already worked to adjust for known sources of polling bias, and that polling bias has historically not been predictable, so it's reasonable to expect it to be largely random noise. If true, this means that if we observe a large polling bias in the last one or two elections, we shouldn't just subtract that bias to try to correct for it - that would tend to make our predictions worse, not better.

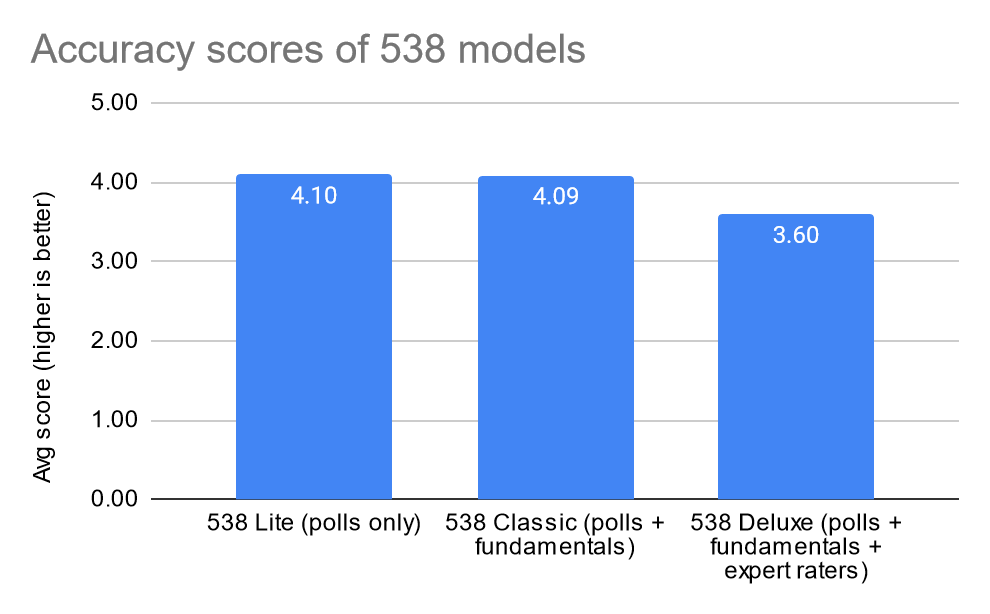

538's model is based on polls, fundamentals, and expert ratings - this is the Deluxe model, which is their default shown on the website. 538 also has a Classic model which is based on polls and fundamentals without expert ratings, and a Lite model based on polls only. In this election cycle, Classic outperformed Deluxe slightly, evidently because the expert raters pulled the prediction in the wrong direction. Of course, that doesn't necessarily mean that it's wrong on average to use the expert raters. Also, 538 discovered a data processing error in their Deluxe forecast post-election where they were accidentally using old expert rating data - and it appears that the corrected Deluxe forecast would have been closer to the Classic forecast, so some of the gap between Deluxe and Classic is actually just a data error.

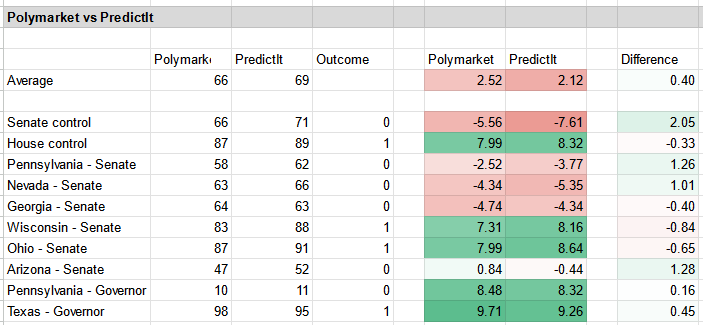

Here's a breakdown of how 538 scored compared to Polymarket (you can find more of these comparisons in my spreadsheet).

Prediction Markets

PredictIt is a well-known real-money prediction market, but it has much higher trading fees than other prediction markets like Polymarket, and low caps on the amounts each person can trade on each market, which reduces price accuracy - these effects can be seen in previous elections and other predictions. In this election, it also appeared to overall predict a stronger red wave than the other platforms, with a 5% higher prediction for Republican Senate control than Polymarket, and therefore its results turned out less accurate than Polymarket.

Polymarket is the prediction market I watched most closely because its high liquidity and volume and low trading fees mean I expected it to be the best representation of the aggregate of all predictions, in the sense that any significant edge in predictions elsewhere in theory ought to be priced in. I considered it my baseline for real-money prediction markets.

Election Betting Odds is an aggregate of a few real-money prediction markets. Its Congressional control markets average together PredictIt, Polymarket, and Smarkets, while its state-level races are based on PredictIt only. I included it in the comparison out of interest, but I did not expect it to be a better prediction than Polymarket, because it seems to me that averaging in PredictIt (with its higher fees and therefore presumably lower accuracy) would tend to hurt the average prediction, not help. Moreover, on a single election cycle where we are scoring highly correlated races, we'd expect Election Betting Odds to score roughly in the middle of the prediction markets that it averages - which is in fact what we observed.
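To illustrate why an averaged forecast lands in the middle on any single question, here is a minimal sketch. The three probabilities are made-up placeholders, not the actual market prices, and the scoring function assumes the 10 × (1 + log2(p)) form implied by the score description earlier in this post.

```python
import math

def score(p_outcome: float) -> float:
    """Linearly transformed log score (assumed form: 10 at p=1, 0 at p=0.5)."""
    return 10 * (1 + math.log2(p_outcome))

def averaged_forecast(probs):
    """Simple unweighted mean of several platforms' probabilities."""
    return sum(probs) / len(probs)

# Hypothetical probabilities that three markets gave to the outcome
# that actually happened (placeholders, not real data).
component_probs = [0.30, 0.34, 0.38]

avg = averaged_forecast(component_probs)
component_scores = [score(p) for p in component_probs]
avg_score = score(avg)

# The average probability sits between the lowest and highest component,
# and the score is monotonic in p, so the averaged forecast's score
# sits between the worst and best component scores.
print(min(component_scores) <= avg_score <= max(component_scores))
```

Averaging can only beat every component when the components err in different directions across questions, which is exactly what highly correlated races make unlikely within one cycle.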

Manifold is a play-money prediction market - it functions similarly to real-money prediction markets except with a play-money currency. (It's actually a bit of a hybrid: traders can buy play-money currency with real money, and they can donate play-money currency to charities at the same rate.) Manifold predicted closer to 538 than the other prediction markets, and therefore ended up being more accurate than them.

Differences between prediction markets

In the weeks leading up to the election, we observed a significant discrepancy between the forecasts on PredictIt, Polymarket, and Manifold - i.e. substantially different prices for the same questions. In an efficient market, different prediction markets should converge closely, because a) sufficiently large differences can be arbitraged, and b) even differences that are too small to efficiently arbitrage after trading fees should still cause traders to tend to buy more on the underpriced market and sell more on the overpriced market, leading towards price convergence. In practice, a combination of high fees and practical differences and difficulties means that prediction markets can sometimes diverge significantly, such as in these midterms forecasts.
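A minimal sketch of the arbitrage logic, under simplifying assumptions: each contract pays 1 if it wins, and fees are modeled as a single hypothetical `fee_on_profit` fraction charged on the winning leg's profit. Real platforms have their own fee schedules, trade caps, and withdrawal costs, which this deliberately ignores.

```python
def arbitrage_profit(yes_price_a: float, yes_price_b: float,
                     fee_on_profit: float = 0.0) -> float:
    """Worst-case profit from buying YES on the cheaper market and NO on
    the more expensive one, per pair of contracts.

    fee_on_profit is a hypothetical simplification: a fraction of the
    winning leg's profit taken as a fee.
    """
    low, high = sorted((yes_price_a, yes_price_b))
    cost_yes = low        # buy YES where it is cheap
    cost_no = 1 - high    # buy NO where YES is expensive
    total_cost = cost_yes + cost_no

    # Exactly one leg pays out 1; the fee applies to that leg's profit.
    payout_if_yes = 1 - fee_on_profit * (1 - cost_yes)
    payout_if_no = 1 - fee_on_profit * (1 - cost_no)
    return min(payout_if_yes, payout_if_no) - total_cost

# Hypothetical prices for the same question on two markets.
print(arbitrage_profit(0.60, 0.70))        # no fees: the gap is pure profit
print(arbitrage_profit(0.60, 0.70, 0.10))  # fees eat most of a 10-point gap
print(arbitrage_profit(0.60, 0.65, 0.10))  # a 5-point gap is no longer profitable
```

Under these assumed numbers, a 10% fee on profits turns a 5-point price gap into a guaranteed loss, which is one way markets can stay several points apart without anyone being able to close the gap.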

The PredictIt vs Polymarket difference is due to PredictIt's high trading fees and limits on how much each user can trade on each market, as well as substantial differences in user base. PredictIt made substantially more Republican-leaning predictions than Polymarket, suggesting that PredictIt's user demographics may be more right-biased than Polymarket's. This is likely because in 2016 left-leaning users were more likely to bet on Clinton, lose big, and leave the site, while right-leaning users were more likely to bet on Trump, win big, and bet more on PredictIt - see Scott's discussion (control-f for PredictIt).

Real vs play money

A fundamental reason Manifold is different from Polymarket and PredictIt is that it uses play-money currency instead of real money. So it can diverge from real-money prediction markets if its users tend to predict differently, and the differences can't simply be arbitraged away.

There are strong reasons to expect real-money prediction markets to outperform play-money ones - real-money prediction markets are typically expected to provide a much bigger incentive to get your predictions right, and in principle if traders noticed that a play-money prediction platform had any edge, they could and would price that into the real-money prediction markets, the same way the real-money prediction markets should (in theory) be pricing in any edge that 538 has.

On the other hand, there are some structural advantages to play-money prediction markets. For example, play-money markets have reduced price distortion because real-money earnings are taxed while play-money earnings are not. Play-money markets may also be more broadly accessible. And perhaps most importantly, the amount of funds predictors have on play-money markets is more closely related to their prediction track record than the funds available to predictors on real-money prediction markets - so play-money prediction markets may be better able to weight good predictors.

How Manifold did better, and why much of it may be noise

So, those are some general reasons why real and play money prediction markets can diverge, and why they each might have advantages. Let's now look specifically at where the Manifold election predictions came from and why they were different than the other prediction markets. I happen to know a lot about this, because I am one of the largest traders on Manifold and spent a lot of time predicting on the Manifold election markets.

Based on digging into the trading patterns on the election markets, I concluded that the largest chunk of the funds traded on them was primarily driven by one of two simple trading strategies: bet towards 538, or bet towards the real-money prediction markets (Polymarket and PredictIt). This shouldn't be too surprising, and of course there were also lots of predictions based on other types of information and analysis, but it makes sense then that on most (but not all) of the predictions, Manifold ended up somewhere in between 538 and Polymarket.

Of course, there were almost certainly traders on Polymarket betting towards 538 too, but it appears that on Manifold that accounted for a larger proportion of funds. In fact, I personally was the trader who bet the most on this prediction that 538 was more accurate (e.g. I held by far the largest position on the Democrats winning the Senate because of this), and this moved the Manifold probability substantially closer to 538. This resulted in Manifold outperforming other prediction markets, because 538's predictions turned out to be more accurate.

A lot of that should probably be thought of as random noise because the decisions of one large trader (myself) appeared to have an outsize impact on the Manifold predictions. Manifold is much newer than PredictIt and Polymarket, with a smaller (although fast growing) user base; and we'd typically expect smaller platforms to make less accurate and noisier forecasts because they are aggregating fewer predictions together. The observation that Manifold did better in this election forecast is a small amount of evidence about its market working better for some reason (see below for analysis of some possibilities), but probably a larger amount of evidence about noisiness of smaller markets.

Manifold also has a separate prediction market instance for a tournament run by the Salem Center/CSPI - I'll refer to it as Salem Manifold here. The market mechanics are essentially the same, but it uses a separate play-money currency, and has a similar but different user base. The Salem Manifold predictions were dramatically different than the Manifold predictions - e.g. for Republican control of the Senate, Salem Manifold predicted 74%, higher than any other platform in my comparison, while Manifold predicted 58%, almost the same as 538. Correspondingly, Salem Manifold ended up with the worst accuracy score in my comparison. My takeaway here is that the market mechanics are important (things like low trading fees, good order execution and market-making, as well as ease-of-use), but differences in the userbase can be far more important, as that was the main difference between Manifold and Salem Manifold. This is also another argument to interpret the differences between Manifold vs Salem Manifold vs other prediction markets as substantially driven by noise - e.g. whether a small number of big traders are predicting one way or the other.

Again, we shouldn't read too much into Manifold outperforming the real-money prediction markets here as general evidence about play-money vs real-money prediction markets, because it's just one data point and it could easily have looked very different with small perturbations to the user base, such as we saw on Salem Manifold.

Prediction Aggregators

Metaculus is a prediction platform where users make predictions and are scored on their accuracy, receiving points for more accurate predictions. Metaculus aggregates the predictions together using a model that calibrates and weights them based on factors like recency and the predictors' track records, to produce an aggregate prediction (called the Metaculus Prediction) that's ideally better than the best individual. Metaculus also provides a simpler and "dumber" aggregate called the Community Prediction, which is simply the median of recent predictions - I also included this for comparison.
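The difference between the two kinds of aggregate can be sketched as follows. The median matches the description of the Community Prediction above; the weighted aggregate is only a hypothetical stand-in (a weighted mean in log-odds space with made-up weights) and is not Metaculus's actual algorithm. All numbers are placeholders.

```python
import math
from statistics import median

def community_prediction(recent_probs):
    """Simple aggregate: the median of recent predictions."""
    return median(recent_probs)

def weighted_logodds_aggregate(probs, weights):
    """Hypothetical track-record-weighted aggregate (illustration only):
    a weighted mean of the predictions in log-odds space."""
    logits = [math.log(p / (1 - p)) for p in probs]
    mean_logit = sum(w * x for w, x in zip(weights, logits)) / sum(weights)
    return 1 / (1 + math.exp(-mean_logit))

# Placeholder predictions for one question; the first forecaster is
# given a much larger (made-up) track-record weight than the rest.
probs = [0.40, 0.55, 0.60, 0.65, 0.70]
weights = [5, 1, 1, 1, 1]

print(community_prediction(probs))                 # the middle prediction
print(weighted_logodds_aggregate(probs, weights))  # pulled toward 0.40
```

The point of the sketch is only that a weighted aggregate can land well away from the median when the most heavily weighted forecasters disagree with the crowd.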

Good Judgment Open is another prediction platform and aggregator which works similarly. In my analysis here, I'll focus on Metaculus because I'm more familiar with it, and because its high score is most interesting to examine closely.

How Metaculus performed

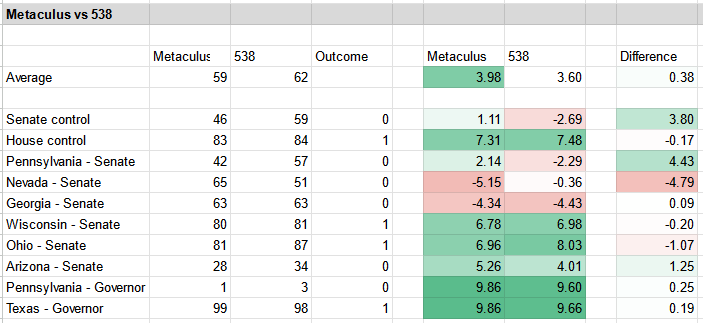

Metaculus made by far the lowest (and therefore most accurate) predictions for Republican Senate control. But interestingly, it didn't just predict better chances for Democrats across the board. Metaculus had the biggest wins (in terms of outperforming the other predictions) on the Arizona Senate race and overall Senate control. Meanwhile, the Metaculus predictions on Nevada and Georgia were much closer to Polymarket than 538, i.e. predicting higher Republican chances than 538, and these turned out to be less accurate - but Metaculus's score losses here were lower than its gains elsewhere. Metaculus also made the most confident predictions on non-competitive races like the governor races in Pennsylvania and Texas, although those only added a tiny amount to its accuracy score.

It’s not clear to me why exactly Metaculus predicted differently than the prediction markets and 538 - I’d love to hear your thoughts. Interestingly, the Metaculus prediction (which aggregates together predictions with a model based on the predictors' track records) did much better than the Metaculus community prediction (which is just a simple median of predictions). For example, on Republican Senate control, the Metaculus prediction was 46% while the community prediction was 60%. I don't have any specific knowledge as to why, but I would guess this is because forecasters with strong track records on Metaculus tended to (correctly) predict better chances for Democrats on those questions.

Good Judgment Open (GJO) did not score quite as well as Metaculus or 538, but still scored better than the prediction markets. Its predictions were broadly similar, but it lost points vs 538 on the Nevada Senate race, while it lost points vs Metaculus on Senate control. I'd be interested to hear if anyone has a deeper analysis of the differences between Metaculus and GJO, because they work fairly similarly. It's interesting to note that GJO and Metaculus's community prediction scored very similarly, perhaps suggesting that GJO's crowd forecast did not gain the advantages of Metaculus's prediction aggregation model.

Metaculus vs prediction markets

Compared to prediction markets, prediction aggregators like Metaculus are similar in that they aggregate many people's predictions together. However, they do this by a mathematical formula rather than by people trading in a market.

One key difference from prediction markets is that users simply predict their beliefs without needing to think about trading mechanics on a market. This can be more natural to some people, while other people may find betting odds more intuitive.

Prediction markets weight users based on how much money they invest. Investing more tends to be correlated with being more confident in a prediction, and also weakly correlated with having a more successful track record. The correlation is weak because how much money predictors have is mostly determined by their jobs, etc., and not much related to how good they are at predicting.

Metaculus can directly weight predictions based on users' track records, which should be a big advantage compared to markets. But one downside is Metaculus has no direct way to place different weight on a user who submits a prediction in 2 seconds on blind instinct vs a user who has spent months modeling the elections, whereas on prediction markets one would expect the former to bet much less than the latter.

Predictions over time

The comparison I did here was based only on the forecasts the day before the election. It would also be interesting to compare how the forecasts did weeks or months before election day (the analysis would be the same, but it would take additional data collection). This is speculation, but I predict (with low confidence) that the FiveThirtyEight forecasts months before the election may be less accurate than other predictions, because one may be able to predict future changes in the polling landscape better than just based on simple models of fundamentals. For example, many predicted that the Democratic bump over the summer would taper off by election day, which is in fact what happened. I also hypothesize that FiveThirtyEight's forecasts may become more accurate relative to the average forecast as the election draws nearer. Mike Saint-Antoine's post Scoring Midterm Election Forecasts has a comparison for 0, 1, and 2 weeks before the election, but it would be great to also compare longer in advance.

How much do these results mean?

This is important, so I'm going to repeat it: scoring one election cycle is basically just one data point on the prediction accuracies. It's like judging a weather forecast's accuracy based on whether it was right on just one or two days - if one forecast said 10% chance of rain and it happened to rain that day, then it would score much worse than another forecast that said 20% chance of rain, but you'd need to observe 10+ similar days to have a reasonable idea of which was more accurate.

To estimate how much we should update our beliefs based on this election, let's do a simplified calculation based on analyzing just the Senate control forecast, since this wraps up all the correlated individual Senate races. If we tried to do the same calculation with all the prediction questions, we’d need to handle the fact that they are highly correlated somehow (I don’t know how). So by focusing on the single Senate control question, we get a lower bound on how much we should update our beliefs, and I think calculating based on a single question is much closer to the truth than calculating based on the sum or average scores on all the questions together.

One way we can think about this is in terms of Bayesian updates. If we use a model where we started off believing that one of the models was the ground truth, and they were all equally likely, then after observing the result our Bayesian estimate of how likely each one was to be right is multiplied by a ratio of <predicted probability of Democrats winning Senate> / <average predicted probability of Democrats winning Senate>.
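The update described above can be sketched in a few lines. The platform names and probabilities below are placeholders chosen to make the arithmetic easy to follow, not the actual forecasts.

```python
def bayesian_update_ratios(probs_of_outcome):
    """Posterior / prior ratio for each forecast, assuming exactly one
    forecast is the 'ground truth' and all start equally likely.

    probs_of_outcome maps forecast name -> probability it assigned to
    the outcome that actually happened.
    """
    n = len(probs_of_outcome)
    total = sum(probs_of_outcome.values())
    average = total / n
    ratios = {}
    for name, p in probs_of_outcome.items():
        prior = 1 / n
        posterior = p / total  # Bayes' rule with equal priors
        # posterior / prior simplifies to p / average
        assert abs(posterior / prior - p / average) < 1e-12
        ratios[name] = p / average
    return ratios

# Hypothetical probabilities given to the observed outcome.
example = {"Forecast A": 0.50, "Forecast B": 0.40, "Forecast C": 0.30}
print(bayesian_update_ratios(example))
```

With these placeholder inputs the average is 0.40, so Forecast A is believed 25% more than before, Forecast B is unchanged, and Forecast C is believed 25% less.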

Another way to model this is to calculate how much money you’d gain or lose if you bet one set of forecasts against the others - specifically, if you made Kelly bets (information-theoretically optimal bets) at one forecast’s probabilities against each of the other forecasts. The same ratio (<predicted probability of Democrats winning Senate> / <average predicted probability of Democrats winning Senate>) turns out to be how much money you end up with, as a multiple of what you started with. (See this post for an explanation of the math; or see also Metaculus’s tournament scoring rule which is based on the same math.)

Note that the amount of funds is meaningful because, in a prediction-market context, it determines how much your future predictions are weighted - so this ratio is essentially how much a prediction market updates your weight after observing an outcome. (This isn't too surprising - Kelly betting has deep theoretical ties to information theory, so it’s actually a statement about how much relative information each forecast has, in addition to a statement about betting profits - which is why we get the same ratio from a Bayesian model and a betting model.)
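To see why Kelly betting reproduces the same ratio, here is a minimal sketch for a single binary question. It assumes a counterparty willing to trade at the price `p_other` (for example, the average forecast), with no fees; the function name and parameters are illustrative.

```python
def kelly_wealth_multiplier(p_yours: float, p_other: float,
                            outcome_yes: bool) -> float:
    """Wealth after one Kelly bet, as a multiple of starting wealth.

    You believe P(YES) = p_yours and can trade YES/NO contracts at the
    price implied by p_other (0 < p_other < 1), with no fees.
    """
    if p_yours >= p_other:
        # YES looks underpriced: buy YES at price p_other.
        fraction = (p_yours - p_other) / (1 - p_other)  # Kelly fraction
        net_odds = (1 - p_other) / p_other              # profit per unit staked
        won = outcome_yes
    else:
        # YES looks overpriced: buy NO at price 1 - p_other.
        fraction = (p_other - p_yours) / p_other
        net_odds = p_other / (1 - p_other)
        won = not outcome_yes
    return 1 + fraction * net_odds if won else 1 - fraction

# Placeholder numbers: whichever side you bet, if YES happens your
# wealth is multiplied by p_yours / p_other.
print(kelly_wealth_multiplier(0.50, 0.40, outcome_yes=True))
print(kelly_wealth_multiplier(0.30, 0.40, outcome_yes=True))
```

If the outcome is NO instead, the multiplier is (1 - p_yours) / (1 - p_other), which is again the ratio of the probabilities the two forecasts assigned to what actually happened.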

This is based only on one data point (Senate control), but it gives us a ballpark estimate that we should believe Metaculus 39% more, 538 7% more, Manifold 8% more, Polymarket 13% less, PredictIt 24% less (compared to the average of them).

Do note that these are fairly small updates. To put it another way, a very rough estimate is that you’d need to observe similar results 10-50 times to get enough confidence that the difference is statistically significant at p=0.05. That's a lot considering that FiveThirtyEight has only been around for 8 election cycles! It would be inaccurate to take away as a stylized fact that "538 is more accurate than prediction markets" or "prediction aggregation without real money does better than real-money prediction markets". This is only a small amount of evidence about those statements.
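One back-of-envelope way to get a range of this size, offered as an assumption rather than the calculation actually used here: treat each cycle as multiplying the relative odds by the same update ratio, and ask how many cycles it takes for the cumulative ratio to reach 20:1, used loosely as a stand-in for p=0.05.

```python
import math

def repeats_needed(ratio_per_cycle: float, target_ratio: float = 20.0) -> int:
    """Number of independent, identical updates needed for the cumulative
    ratio to reach target_ratio.

    target_ratio=20 is an assumed rough analogue of p=0.05, not a formal
    significance test.
    """
    return math.ceil(math.log(target_ratio) / abs(math.log(ratio_per_cycle)))

# Update ratios quoted above: Metaculus 39% more, 538 7% more.
print(repeats_needed(1.39))  # 10
print(repeats_needed(1.07))  # 45
```

Under this assumption the largest update in the comparison needs about 10 repeats and the smaller ones need several dozen, which is the same order of magnitude as the 10-50 range.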

More forecasting comparisons to come!

Still, even though the accuracy comparison is very limited by being about just one election cycle, I think we can learn a lot from observing their different predictions and trying to interpret how they came to make different predictions. And of course, we can apply the same sort of comparison analysis to many other predictions - not just elections - and hopefully get more data to learn more about forecasting.

The scoring itself is fairly easy to scale to more predictions; the main work is getting the data. That means both collecting the data from known questions and finding identical questions to compare, since different prediction platforms often have forecasts on the same topics but with slight yet significant differences in the questions, which make them incomparable. But there are enough questions that do exist across multiple platforms that more of these comparisons are definitely possible, and I hope to see more of them in the near future! One thing I'm looking forward to is the annual tradition of making predictions for each year - some of us are working to standardize the prediction questions a bit more to enable more comparisons like this.
